Putting yourself out there as a technology soothsayer is risky, but looking to 2017 and beyond, I’m excited. For the first time, I feel like true computer-aided design (CAD) and computer-aided manufacturing (CAM) will be a reality in my lifetime. Until now, computers have never participated in people’s thought processes. They just waited patiently for instructions, never pushing people beyond their creative boundaries.

But that’s changing. Here are five design and technology predictions to look forward to.

1. Virtual reality (VR) will make a big impact on the construction industry. VR as an aid to architects is a growing trend, but its most profound effect may be on construction. VR gives construction professionals a more faithful representation of a project than they typically get from text-based schedules (like Gantt charts) or 3D graphical data (like BIM models).

With VR, general contractors will be able to walk virtually onto a job site and see what it will look like the following week. Once immersed in the data, they will be able to point out issues, resolve differences, and coordinate changes before anything is built. Workers will also be able to do practice runs.

Giving construction managers and workers this new way of relating to their data will save massive amounts of time and money and help prevent mistakes and accidents.

2. Machine learning will take product-design creativity to a new level. Machine learning is accelerating exponentially. Just as scientists have stimulated neurons in the brain to trigger false memories, it’s possible to stimulate ‘neurons’ inside software to discover objects that have never been invented.

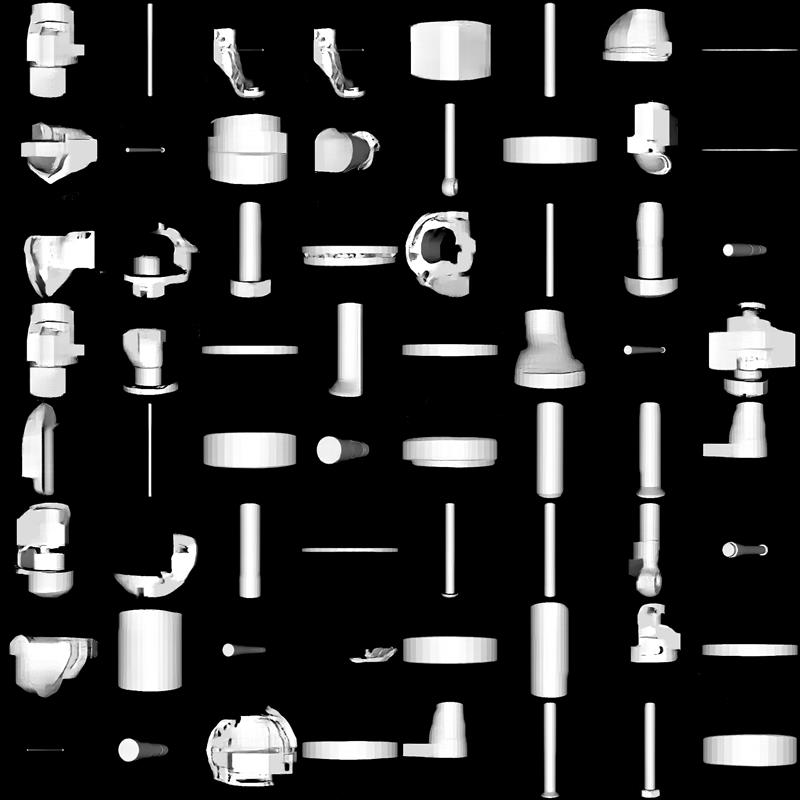

An example is Autodesk’s Design Graph project, which mines massive amounts of data, discerns relationships among parts (such as gears, bolts, and screws), groups similar parts together by shape, and makes relevant recommendations. As the system was trained, it developed a kind of recognition similar to the way the human brain works: just as people can tell a dog from a cat, the software can tell one kind of part from another.

Say I stimulate one set of neurons to make a chair and another set to make an airplane. I can slide between the two, stimulate a ‘false memory’, and watch the object morph from chair to plane. What’s interesting isn’t so much those morphologically dissimilar examples. It’s looking in the neighbourhood of objects that already exist and finding the uncharted spaces in between, which might point to new product opportunities.

Novel 3D catalog items for Autodesk Inventor generated by an AI. Courtesy Autodesk.
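The “slide between the two” idea corresponds to what machine-learning practitioners call interpolation in a model’s latent space. The article doesn’t describe Design Graph’s internals, so this is only a minimal sketch under stated assumptions: each object is assumed to be already encoded as a fixed-length vector, and the `chair` and `airplane` codes below are made-up placeholders. The sketch just produces the intermediate vectors; a real system would pass each one to a decoder to get geometry.

```python
from typing import List

Vector = List[float]


def lerp(a: Vector, b: Vector, t: float) -> Vector:
    """Linearly blend two latent vectors: t=0 gives a, t=1 gives b."""
    if len(a) != len(b):
        raise ValueError("latent vectors must have the same length")
    return [(1.0 - t) * x + t * y for x, y in zip(a, b)]


def morph_path(a: Vector, b: Vector, steps: int) -> List[Vector]:
    """Return `steps` evenly spaced points from a to b, including both ends."""
    if steps < 2:
        raise ValueError("need at least two steps")
    return [lerp(a, b, i / (steps - 1)) for i in range(steps)]


# Hypothetical 3-dimensional codes; real models use far more dimensions.
chair = [1.0, 0.0, 0.5]
airplane = [0.0, 1.0, -0.5]

for point in morph_path(chair, airplane, 5):
    print([round(v, 2) for v in point])
```

Sampling points close to a single existing object’s code, rather than along a line between two distant ones, would be one way to explore the ‘neighbourhood’ described above.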

3. Sensing robots will make manufacturing faster and more accurate. Consumer Internet of Things devices can be ridiculously overwrought. Why do you need a toaster connected to your smartphone when a $35 Black+Decker will do just fine? In the near future, IoT will make a bigger impact in industrial robotics.

Until now, robots have been completely blind, executing the same rote interactions regardless of the people, other robots, or workpiece in front of them. Going forward, manufacturing robots need to be more flexible and adaptable to different situations.

Important work is being done today on sensing robots that can see their surroundings and alter their programs to avoid repeating the same mistakes. The Autodesk Applied Research Lab is also working on a large-scale additive-manufacturing robot that is given goals rather than tasks. At every moment while it’s printing, it measures how well it’s accomplishing the goal and can course-correct.
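Goal-driven behavior like this is, at its core, a feedback loop: act, measure the result against the goal, adjust. The lab’s actual control method isn’t described here, so this is a minimal sketch assuming a simple proportional controller and a hypothetical simulated printer whose layer height equals the commanded extrusion rate times an efficiency the controller doesn’t know.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class SimulatedPrinter:
    """Hypothetical stand-in for a real machine: each layer comes out at the
    commanded extrusion rate times an efficiency the controller cannot see."""
    efficiency: float

    def deposit(self, extrusion_rate: float) -> float:
        return extrusion_rate * self.efficiency


def print_with_feedback(printer: SimulatedPrinter, target_height: float,
                        layers: int, gain: float = 0.5) -> List[float]:
    """Print `layers` layers, measuring each one and correcting the next."""
    rate = target_height  # naive first guess: assume perfect (1.0) efficiency
    heights: List[float] = []
    for _ in range(layers):
        measured = printer.deposit(rate)   # sense how this layer actually came out
        heights.append(measured)
        error = target_height - measured   # how far from the goal?
        rate += gain * error               # course-correct for the next layer
    return heights


heights = print_with_feedback(SimulatedPrinter(efficiency=0.8), target_height=1.0, layers=20)
print([round(h, 3) for h in heights[:5]], round(heights[-1], 4))
```

In this simulation, a fixed-task robot would keep printing 0.8-unit layers indefinitely; the loop instead closes the gap layer by layer. The gain is a design choice: set it too high and the corrections overshoot.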

Another robot at the lab can pick up a novel object it hasn’t encountered before, form an ‘opinion’, and determine the most grippable part of the object. If it fails, it learns from its mistakes. The next step could be to replace the robot’s literal camera eyes with a rendered scene. We could give it a Lego brick on a park bench, among other Lego bricks, or on a cat.

Working with the computer and the machine-learning system, the robot imagines these different scenarios, so it doesn’t have to act them out in the real world. These training scenarios can run in parallel and at scale, so once the robot learns to pick up a Lego brick, it can accelerate through an offline process to learn tens of millions of different objects simultaneously. Then it will know how to pick up almost anything.

4. Generative design and simulation will predict manufacturability. Generative design is like turning the game Battleship around and showing you where all the ships are. With simulation and generative design, you will be able to see all of your options, and all of them will be manufacturable because they are vetted by the computer first.
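One way to picture “vetted by the computer first” is a generate-and-filter loop: enumerate many candidate designs, discard any that fail a manufacturability check or a structural check, and rank the survivors. This is a toy sketch, not how Autodesk’s tools work internally. The two design parameters, the mass and stiffness formulas, and both threshold constants are invented purely for illustration.

```python
from dataclasses import dataclass
from itertools import product
from typing import List, Sequence

# Hypothetical limits, for illustration only.
MIN_PRINTABLE_WALL_MM = 1.0  # thinnest wall the assumed printer can build
MIN_STIFFNESS = 3.0          # required stiffness score, arbitrary units


@dataclass(frozen=True)
class Candidate:
    wall_mm: float  # wall thickness, mm
    infill: float   # fraction of the interior that is filled, 0..1


def mass(c: Candidate) -> float:
    # Toy proxy: thicker walls and denser infill both add weight.
    return 2.0 * c.wall_mm + 10.0 * c.infill


def stiffness(c: Candidate) -> float:
    # Toy proxy standing in for a real simulation result.
    return 1.5 * c.wall_mm + 4.0 * c.infill


def generate(walls: Sequence[float], infills: Sequence[float]) -> List[Candidate]:
    """Enumerate every combination of the design parameters."""
    return [Candidate(w, f) for w, f in product(walls, infills)]


def vetted_options(walls: Sequence[float], infills: Sequence[float]) -> List[Candidate]:
    """Keep only candidates that pass both checks, lightest first."""
    feasible = [
        c for c in generate(walls, infills)
        if c.wall_mm >= MIN_PRINTABLE_WALL_MM   # manufacturability check
        and stiffness(c) >= MIN_STIFFNESS       # structural check
    ]
    return sorted(feasible, key=mass)


options = vetted_options(walls=[0.5, 1.0, 1.5, 2.0], infills=[0.1, 0.3, 0.5])
for c in options:
    print(c, "mass:", mass(c))
```

Real generative-design tools search far larger spaces using actual simulation and optimization rather than a brute-force grid, but the principle of only surfacing options that have already passed the checks is the one described above.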

For example, Airbus used generative design to create an airplane partition (which separates crew from passengers) that is 45% lighter than conventional partitions. Of the 10,000-plus design options created through generative design, the Airbus team chose a couple to test comprehensively using simulation software. The result was a structurally sound yet lightweight partition that could be printed by three additive-manufacturing systems—without fail.

The process didn’t just save time and money. If the thousands of new A320 planes currently on order have these partitions installed, it could cut CO2 emissions by hundreds of thousands of metric tons every year.

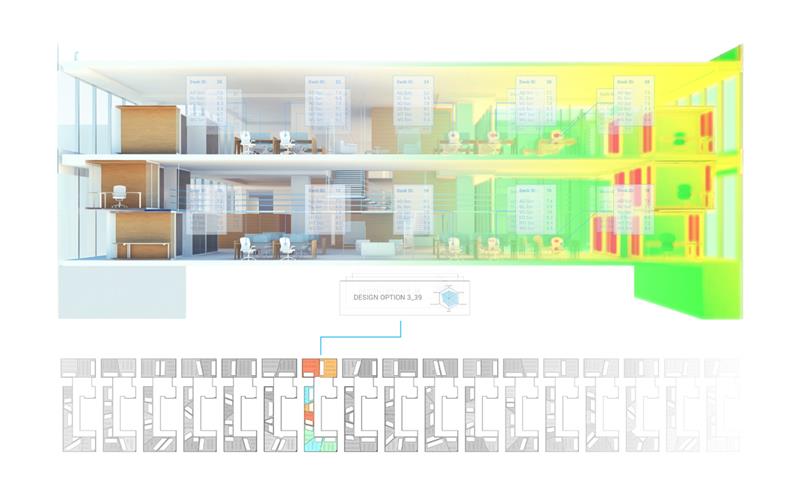

Generative design floor-plan options for the MaRS building in Toronto. Courtesy Autodesk.

5. Crowdsourced data and generative design will create happier workplaces. Generative design will hit the manufacturing industry first, where cycle times from inception to product are short. But there’s also room for architects to use generative design to explore goals, constraints, and outcomes.

An example is what the University of Toronto and Autodesk did with the MaRS building, surveying employees to understand their needs for collaboration, daylight, privacy, and more. Based on that data, the generative-design tool explored thousands of configurations and produced multiple candidate plans.

Building orientation, fenestration and shading, and number of floors have all been explored extensively. But what improves employee productivity inside a building? The answer lies in mapping survey data on human preferences (which vary from person to person) onto the calculations the computer performs.

That means we’re investigating a highly dynamic situation with multiple objectives, which is where generative-design techniques do best compared with the traditional “Let’s pick the first thing that works” approach.
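The contrast between a multi-objective search and “pick the first thing that works” can be made concrete with Pareto filtering, a standard multi-objective technique: keep every option that no other option beats on all objectives at once. The article doesn’t say which method the MaRS tool used, and the plan names and scores below are hypothetical (higher is better for each objective).

```python
from typing import Dict, List, Optional, Sequence

Scores = Dict[str, float]


def dominates(a: Scores, b: Scores, objectives: Sequence[str]) -> bool:
    """True if `a` is at least as good as `b` on every objective and
    strictly better on at least one (higher scores are better)."""
    at_least_as_good = all(a[k] >= b[k] for k in objectives)
    strictly_better = any(a[k] > b[k] for k in objectives)
    return at_least_as_good and strictly_better


def pareto_front(plans: Dict[str, Scores], objectives: Sequence[str]) -> List[str]:
    """Names of plans that no other plan dominates."""
    return [
        name for name, scores in plans.items()
        if not any(dominates(other, scores, objectives)
                   for other_name, other in plans.items() if other_name != name)
    ]


def first_that_works(plans: Dict[str, Scores], thresholds: Scores) -> Optional[str]:
    """The traditional approach: return the first plan meeting every threshold."""
    for name, scores in plans.items():
        if all(scores[k] >= minimum for k, minimum in thresholds.items()):
            return name
    return None


# Hypothetical floor-plan scores on three survey-derived objectives.
OBJECTIVES = ["daylight", "privacy", "collaboration"]
plans: Dict[str, Scores] = {
    "A": {"daylight": 0.6, "privacy": 0.5, "collaboration": 0.6},
    "B": {"daylight": 0.9, "privacy": 0.4, "collaboration": 0.7},
    "C": {"daylight": 0.5, "privacy": 0.9, "collaboration": 0.5},
    "D": {"daylight": 0.6, "privacy": 0.6, "collaboration": 0.8},
}
thresholds: Scores = {"daylight": 0.5, "privacy": 0.5, "collaboration": 0.5}

print("first that works:", first_that_works(plans, thresholds))
print("Pareto front:", pareto_front(plans, OBJECTIVES))
```

Here the first acceptable plan, A, matches D on daylight and is beaten by it on privacy and collaboration. The Pareto filter drops A and surfaces the real trade-offs (B for daylight, C for privacy, D for collaboration), leaving the final call to people.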

This article originally appeared on Autodesk’s Redshift, a site dedicated to inspiring designers, engineers, builders, and makers.

Author profile:

Jeff Kowalski is senior vice president and chief technology officer at Autodesk.