He believes that the equipment that comes with VR systems, such as gloves and headsets, isolates users from those they are working with and will be a major barrier to adoption.

Dr Plasencia says: “With VR you can access the 3D information, but you are giving up on the world around you.

“What we want to do is have an intuitive way of interacting and visualising but use it like your phone – your phone is something you carry with you and when you need to use it you do, when you don’t you don’t. VR has never been that easy. If you want VR you need to get a headset and the glasses. On our platform we want something where you can just use the device when you need it.”

MistForm is the name of the system that Dr Plasencia has created, a 39-inch, mid-air, fog screen display that allows users to reach through it and interact with 2D and 3D objects.

While shape-changing displays and fog screens already exist in labs, Dr Plasencia says this is the first time the two technologies have been combined. Unlike a standard projector screen, which achieves uniform brightness regardless of the user’s position, fog displays scatter light unevenly and in different directions. As a result, a pixel appears bright to a viewer standing in front of the projector, but dim when viewed from the side.
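
To give a feel for how sharply brightness can fall away from the projector axis, the sketch below uses the Henyey-Greenstein phase function, a standard approximation for forward-peaked scattering. It is an illustrative assumption rather than a model taken from the MistForm work: the function names and the anisotropy value `g = 0.8` are hypothetical and were not measured for this display.

```python
import math


def hg_phase(theta_deg, g=0.8):
    """Henyey-Greenstein phase function at scattering angle theta (degrees).

    g is the anisotropy parameter (0 = isotropic, close to 1 = strongly
    forward-scattering). The default of 0.8 is an assumed value, not a
    measurement of the fog used in MistForm.
    """
    cos_t = math.cos(math.radians(theta_deg))
    return (1 - g**2) / (4 * math.pi * (1 + g**2 - 2 * g * cos_t) ** 1.5)


def relative_brightness(theta_deg, g=0.8):
    """Brightness at theta relative to looking straight along the projector axis."""
    return hg_phase(theta_deg, g) / hg_phase(0.0, g)


if __name__ == "__main__":
    for angle in (0, 10, 30, 60):
        print(f"{angle:>2} deg off-axis: {relative_brightness(angle):.3f} of on-axis brightness")
```

Under this assumed model, brightness drops steeply within a few tens of degrees off-axis, which is the kind of view-dependent dimming the article describes.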

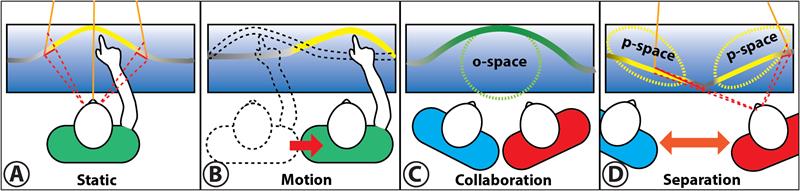

Sensors detect how many users there are and where they are, and drive actuators that move the fog screen to give the most comfortable view

MistForm addresses this problem by using sensors, linear actuators and a shape regression model to control the shape of the screen depending on the position of the user, removing this image distortion. Fog particles are released through a flexible pipe to form the display, which is stabilised by curtains of air, blown by a series of fans. The system uses the Microsoft Kinect motion-sensing platform linked to an OptiTrack 3D system, which detects the position of the user and their hands and drives the actuators to adjust the screen and have the display react to those hand movements.
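
The sense-then-actuate loop described above can be sketched in a few lines. This is not the shape regression model used in MistForm, which the article does not detail. It is a toy geometric rule, assumed here for illustration, that places the screen on an arc centred on the tracked user and clamps each actuator to the 7-inch range quoted in the article. The function name, coordinate convention and actuator positions are all hypothetical.

```python
import math

# Actuator travel: the 7-inch range quoted in the article, converted to metres.
TRAVEL_M = 7 * 0.0254


def actuator_targets(user_x, user_z, actuator_xs, travel=TRAVEL_M):
    """Return one displacement (metres, towards the user) per actuator.

    Coordinates are an assumed convention: x runs along the screen's rest
    plane, z is the user's distance in front of it. Each screen point is
    moved onto a circle of radius user_z centred on the user, so the
    surface faces the viewer, then clamped to the actuator travel.
    """
    if user_z <= 0:
        raise ValueError("user must be in front of the screen (user_z > 0)")
    targets = []
    for x in actuator_xs:
        dx = x - user_x
        # Guard against actuators further sideways than the arc radius.
        inside = max(user_z**2 - dx**2, 0.0)
        z = user_z - math.sqrt(inside)
        targets.append(min(max(z, 0.0), travel))
    return targets


if __name__ == "__main__":
    xs = [-0.4, -0.2, 0.0, 0.2, 0.4]  # hypothetical actuator positions (m)
    print(actuator_targets(user_x=0.0, user_z=1.0, actuator_xs=xs))
```

In a real system the user position would come from the tracking hardware on every frame, and a learned model would replace the arc rule. The clamping step matters either way, since the screen can only move within its mechanical range.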

Dr Plasencia says: “With other 3D display technologies your eyes need to focus on the display surface. Even if you see an object ‘popping out’ of the screen, if you then try to touch it, your eyes will need to focus either on your hand or on the display, which soon can lead to eye fatigue. MistForm can adapt to these scenarios, moving the display surface so that both the object and the hand remain comfortably visible. With this kind of technique, we can provide comfortable direct hand 3D interaction in all the range your arms can reach.”

The screen can move towards and away from the user and can bend into numerous shapes, within a range of 7 inches. Adapting to the user, rather than the other way around, the display can curve around two collaborators, providing optimum visibility for both people, or it can take on a triangular shape if those two people need to work on different areas of the screen independently.

This is another major benefit of MistForm over VR for designers in a collaborative environment. If a colleague in the same room sees that they can contribute to the design, they can do so on the fly, without interrupting the first designer, who would otherwise be immersed and isolated by a VR headset.

When it comes to editing text, or making precise changes to CAD models, MistForm isn’t going to be replacing PC screens any time soon, Dr Plasencia explains: “But, if you want to be more creative, explorative, using your hands and seeing the results that arrive and not having to think about the technology and commands it can be very positive.”

Combatting VR eye strain

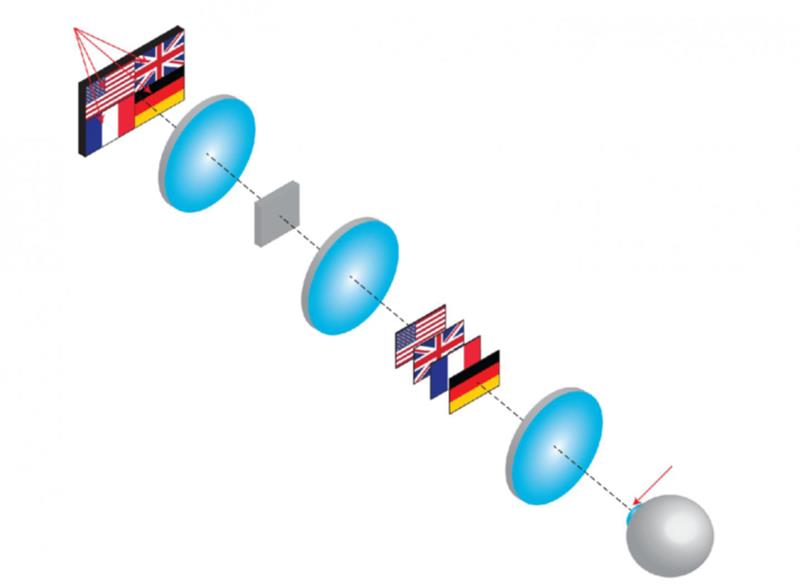

Most current 3D VR/AR displays present two images that the viewer’s brain uses to construct an impression of the 3D scene. This stereoscopic display method can cause eye fatigue, discomfort and even motion sickness because of a problem called the vergence-accommodation conflict. The two images that make up a stereoscopic 3D image are displayed on a single surface that is the same distance from your eyes. But these images are slightly offset to create the 3D effect. Your eyes then have to work differently than usual, converging to a distance that seems further away, but keeping your lenses focused on the image that is actually just centimetres from your face.

Researchers at the University of Illinois at Urbana-Champaign’s Intelligent Optics Lab are developing a new optical mapping 3D display that makes VR viewing more comfortable. To overcome these limitations, the researchers’ display method divides the digital display into subpanels. A spatial multiplexing unit (SMU) shifts these subpanel images to different depths with correct focus cues for depth perception. But unlike the offset images from the stereoscopic method, the SMU also aligns the centres of the images to the optical axis. An algorithm blends the images together, making a seamless image.

“In the future, we want to replace the spatial light modulators with another optical component such as a volume holography grating,” explains lead researcher, Prof Liang Gao. “In addition to being smaller, these gratings don’t actively consume power, which would make our device even more compact and increase its suitability for VR headsets or AR glasses.”
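
The vergence-accommodation conflict described above can be put into rough numbers. The sketch below uses two standard optics relations: the vergence angle for a target at distance d is 2·atan(IPD / 2d), and focusing demand in dioptres is 1/d with d in metres. The 63 mm interpupillary distance and the example distances are assumed illustrative values, not figures from the Illinois research.

```python
import math


def vergence_angle_deg(distance_m, ipd_m=0.063):
    """Angle between the two eyes' lines of sight when fixating at distance_m.

    ipd_m is the interpupillary distance; 63 mm is an assumed typical value.
    """
    return math.degrees(2 * math.atan(ipd_m / (2 * distance_m)))


def va_conflict_dioptres(focus_distance_m, vergence_distance_m):
    """Mismatch between where the eyes focus and where they converge, in dioptres."""
    return abs(1 / focus_distance_m - 1 / vergence_distance_m)


if __name__ == "__main__":
    focus = 0.5    # assumed distance at which the display appears in focus (m)
    virtual = 2.0  # assumed apparent distance of the stereoscopic object (m)
    print(f"vergence angle at {virtual} m: {vergence_angle_deg(virtual):.2f} deg")
    print(f"conflict: {va_conflict_dioptres(focus, virtual):.2f} D")
```

A display that presents each image at its correct depth, as the subpanel approach aims to, would drive this mismatch towards zero.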