Evan Atherton, senior research engineer, Autodesk Robotics Lab, explains: “What they were doing was so exciting that Google acquired them. Shortly after the acquisition, Bot & Dolly went dark – because Google – and with them so did their tool. So, even though they built this super awesome tool, nobody had access to it anymore.”

The work Bot & Dolly did inspired Atherton to create his own similar tool, but he soon found that he wasn’t the only one attempting this.

“The problem was we were all building the same tool,” he says. “One of the major trends that we found in our research is that people keep building the same robotics tools over and over and over, not because they want to but because they lack access to tools that have already been created.”

To fight this trend, Atherton designed and released Mimic, free, open-source software that provides intuitive animation-based tools in Autodesk Maya for programming industrial robots. Anyone who wants to can use Mimic, and through it the animation tools in Maya, and can also build on it, modify it and contribute back to help other users of the software.

“There are more people who can animate in Maya than are trained as professional roboticists,” Atherton says. “If you take a 15-minute YouTube tutorial on Maya, you can programme a robot.”

One project that made use of Mimic was headed up by Landis Fields from Industrial Light & Magic. Fields wanted to shoot a chase sequence using a physical model spaceship for a series he is developing at the studio. To create the dynamic movement he wanted, two robots were used: one to hold and move the model and another to hold and move the camera.

Intuitive programming

Fields had no prior experience with programming industrial robots, but Mimic allowed him to create a virtual camera to visualise the movements of the model and to input keyframe animation: the specific positions the model and the camera needed to hit while moving, and the time intervals between them. Mimic then interpolates the movements required to recreate this animatic, allowing the user to edit out moments where the model leaves the frame, crashes into the camera and so on. All this information is then exported as a program for the robots to follow, and hours later the scene can be filmed.
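
To make the keyframe idea concrete, here is a minimal Python sketch of turning a few keyframes into a continuous path. It is an illustration under stated assumptions, not Mimic’s code: it uses straight-line (linear) interpolation between keyframes, whereas animation curves in Maya are usually smoother, and the names `interpolate`, `sample_path` and `model_keys` are hypothetical.

```python
from bisect import bisect_right

# Hypothetical keyframe: (time in seconds, (x, y, z) position in millimetres).
Keyframe = tuple[float, tuple[float, float, float]]

def interpolate(keys: list[Keyframe], t: float) -> tuple[float, float, float]:
    """Position at time t, linearly interpolated between the surrounding keyframes.

    Times before the first key or after the last are clamped to those keys.
    (Assumption: linear blending; real animation curves are usually smoother.)
    """
    times = [k[0] for k in keys]
    if t <= times[0]:
        return keys[0][1]
    if t >= times[-1]:
        return keys[-1][1]
    i = bisect_right(times, t)  # keys[i-1] is at or before t, keys[i] is after t
    (t0, p0), (t1, p1) = keys[i - 1], keys[i]
    alpha = (t - t0) / (t1 - t0)
    return tuple(a + alpha * (b - a) for a, b in zip(p0, p1))

def sample_path(keys: list[Keyframe], step: float) -> list[tuple[float, float, float]]:
    """Sample the interpolated path at a fixed time step, e.g. for export."""
    n = round((keys[-1][0] - keys[0][0]) / step)
    return [interpolate(keys, keys[0][0] + i * step) for i in range(n + 1)]

# Hypothetical keyframes for the model ship.
model_keys = [
    (0.0, (0.0, 0.0, 500.0)),
    (2.0, (1000.0, 0.0, 500.0)),
    (3.0, (1000.0, 400.0, 700.0)),
]
print(interpolate(model_keys, 1.0))  # (500.0, 0.0, 500.0)
```

Sampling at a fixed time step, as `sample_path` does, mirrors the idea of exporting the animated motion as a series of targets for a robot to follow; a real export would presumably also need to check things such as reach and joint limits.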

Atherton says: “In a world where it can take weeks or months to programme a single robot in a factory cell, the fact that Landis – who has never touched a robot – programmed two robots to work together to do something this complex overnight is insane. It probably took us longer to wire the LEDs into the model ship than it did for us to programme the robots.”

This is a departure from the way industry currently programmes robots: painstakingly entering lines of code that move the robot to a specific location, carry out a task, move it to another specific location, and repeat.

To combat this in industry, Autodesk’s Robotics Lab is looking for ways for users to interact better with their robots to accomplish their goals. To this end, Heather Kerrick, senior research engineer at the Autodesk Robotics Lab in San Francisco, has been working with colleagues at Autodesk’s Technology Centre in Birmingham, which opened in early 2018.

The collaboration has developed a simple interface that allows text commands to be sent through the messaging app Slack and through Forge, Autodesk’s cloud-based developer platform, to the robot, which then carries out tasks such as cutting and milling.

“Forge makes a tool path, gives you a preview, you can say ‘yes’ or ‘no’, then say ‘please send this to the robot’ and the robot runs the milling operation,” explains Kerrick. “The operator didn’t need to set the whole robot up, they’re now able to accomplish the task they need to accomplish, which is dealing with the STL and approving what the robot is doing. We think that enabling these kinds of ways to talk to your machines will be part of the future of making and part of how automation can scale.”

The team at the Birmingham facility even installed a camera in the robot’s cell so that, after the milling is completed, the robot can hold the part up for visual inspection, meaning the operator doesn’t even have to be in the same building.

“We’re trying to find future ways of working with robots; it’s hard when those future tools don’t exist yet,” Kerrick says. “I’ve been using Mimic as a project environment, as a proxy, for creating other software workflows.

Assembly

“The project that I’ve been working on is ‘CIRA’ (CAD Informed Robotic Assembly). The idea is that assemblies already have a ton of information baked into them about how the different parts relate to one another. Exploded assemblies are often used as actual assembly instructions, yet they’re not really machine-readable; there’s a lot of information that gets lost in translation between all the painstaking work that goes into putting your assembly together, putting in constraints, putting in joints and then getting it to a factory line that actually puts those parts together. There needs to be some sort of intermediary that can read the assembly and then turn that into individual bits of machine instruction.”

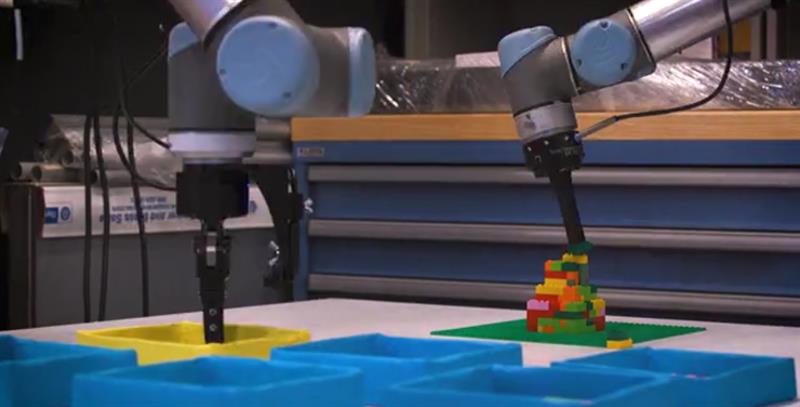

The goal of the CIRA project is to pull as much information as possible out of an existing assembly while minimising engagement with the robot, to simplify the task. To do this, Kerrick used CAD data from a Lego car, exploded all the parts and worked out the best way for them to be placed back together by two robots: one with a gripper – to pick and place – and one with a plunger – to press the pieces together.

“That’s the kind of thing that shows up a lot in assemblies,” Kerrick explains. “One bolt might block access to another, so that first one needs to be put in in a very particular order. That’s the kind of information that the designer of that part may know and needs to convey to whoever’s going to be driving the machinery.”
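
One general way to capture that kind of ordering knowledge is as precedence constraints, which a topological sort turns into a valid build sequence. The sketch below uses Python’s standard-library `graphlib` module; the parts and constraints are hypothetical, and it is not a description of how CIRA itself works.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical precedence constraints: each part maps to the parts that must
# already be in place before it can be installed. Here bolt_a would block
# access to bolt_b, so bolt_b has to go in first.
must_follow = {
    "base_plate": set(),
    "bolt_b": {"base_plate"},
    "bolt_a": {"base_plate", "bolt_b"},
    "cover": {"bolt_a", "bolt_b"},
}

def assembly_order(constraints: dict[str, set[str]]) -> list[str]:
    """Return one build order that respects every constraint.

    Raises CycleError if the constraints contradict each other
    (e.g. A must precede B and B must precede A).
    """
    return list(TopologicalSorter(constraints).static_order())

print(assembly_order(must_follow))  # ['base_plate', 'bolt_b', 'bolt_a', 'cover']

try:
    assembly_order({"a": {"b"}, "b": {"a"}})
except CycleError:
    print("contradictory constraints")
```

When the constraints leave some freedom, several orders are valid and the sort returns just one of them; a planner could then choose among them using other criteria, such as how far the gripper has to travel.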

Still, the easiest way to get a robot to move to a particular place is to give it a very precise location. Kerrick created a fixture plate that holds each piece of the Lego car in a predetermined location. Using Mimic, she ran the explosion of the pieces, from the fully built car to the individual components on the fixture plate, in reverse to create assembly instructions showing the car building itself. Mimic then uses this to tell the robots the exact distances the pieces must travel to reach their final locations and click into place.
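
Running the explosion backwards can be pictured as reversing a list of moves and swapping each move’s start and end points; the displacement of each reversed move is then the distance a piece must travel from the fixture plate to its place in the car. This is a hypothetical sketch with made-up part names and coordinates, not Mimic’s data model.

```python
from dataclasses import dataclass

Vec = tuple[float, float, float]

@dataclass(frozen=True)
class Move:
    part: str
    start: Vec  # where the part begins this move (millimetres)
    end: Vec    # where the part finishes this move (millimetres)

def reverse_explosion(explosion: list[Move]) -> list[Move]:
    """Turn a disassembly (explosion) sequence into an assembly sequence.

    The last part to leave the car is the first to go back, and each move
    now runs from the fixture-plate position to the assembled position.
    """
    return [Move(m.part, m.end, m.start) for m in reversed(explosion)]

def displacement(move: Move) -> Vec:
    """Vector the part must travel during a move (end minus start)."""
    return tuple(e - s for s, e in zip(move.start, move.end))

# Hypothetical explosion: the roof comes off first, then a wheel.
explosion = [
    Move("roof", start=(0.0, 0.0, 40.0), end=(200.0, 0.0, 0.0)),
    Move("wheel", start=(30.0, 20.0, 5.0), end=(200.0, 60.0, 0.0)),
]
for move in reverse_explosion(explosion):
    print(move.part, displacement(move))
# wheel (-170.0, -40.0, 5.0)
# roof (-200.0, 0.0, 40.0)
```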

What’s more, because this application is based around measurements defined by the fixture plate, the plate can be moved anywhere within the reach of the robots and they will still be able to carry out the assembly.
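
Moving the plate need not break the program if each piece’s position is stored relative to the plate, so that only the plate’s own pose has to be updated. The sketch below assumes a flat plate that can be shifted and rotated about the vertical axis; the function name and numbers are illustrative, not taken from CIRA or Mimic.

```python
import math

Vec = tuple[float, float, float]

def plate_to_robot(p_plate: Vec, plate_origin: Vec, plate_yaw_deg: float) -> Vec:
    """Convert a point from fixture-plate coordinates to robot-base coordinates.

    Assumes the plate lies flat, so its pose is a translation (plate_origin)
    plus a rotation of plate_yaw_deg degrees about the vertical (z) axis.
    """
    yaw = math.radians(plate_yaw_deg)
    c, s = math.cos(yaw), math.sin(yaw)
    x, y, z = p_plate
    ox, oy, oz = plate_origin
    return (ox + c * x - s * y, oy + s * x + c * y, oz + z)

# A piece stored 50 mm along the plate's x axis (hypothetical).
piece = (50.0, 0.0, 0.0)
print(plate_to_robot(piece, (400.0, 0.0, 0.0), 0.0))  # (450.0, 0.0, 0.0)

# Move and turn the plate: the stored plate-relative position is unchanged,
# only the plate pose differs.
moved = plate_to_robot(piece, (300.0, 200.0, 10.0), 90.0)
print([round(v, 6) for v in moved])  # [300.0, 250.0, 10.0]
```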

Building a car from Lego is obviously just a demonstration of Kerrick’s early-stage research with CIRA, but it hints at major consequences for industrial applications, where robots are currently fixed and work in precisely programmed locations. In the not-too-distant future, Industry 4.0, smart factories and mass customisation will require robots to work more flexibly.

Collaboration

“Maya and Mimic are great prototyping tools for exploring this larger workflow, especially when it comes to where the robots are in a factory environment,” Kerrick says. “What I’m really excited about for CIRA 2.0 is giving the robot a higher-level command, like ‘please go pick up that part’, and the robot will go to a region where it knows that part is, use a camera to see that part and grasp it.

“Evan and I are working together to merge our two projects. It’s still early stages, but we’re really excited about the potential future for making robots easier for people to control, and making it so you can ask higher-level questions as opposed to getting into the nitty-gritty of very particular turning angles and locations in 3D space.”

Atherton concluded: “There’s been a ton of anxiety lately about robots and automation displacing humans, much of which is well-founded. But we’re working towards a brighter future where a new breed of technically literate creatives have access to intuitive design tools that enable them to do more than they were capable of doing before, to better express their creativity and to do it all with fewer technical barriers.”

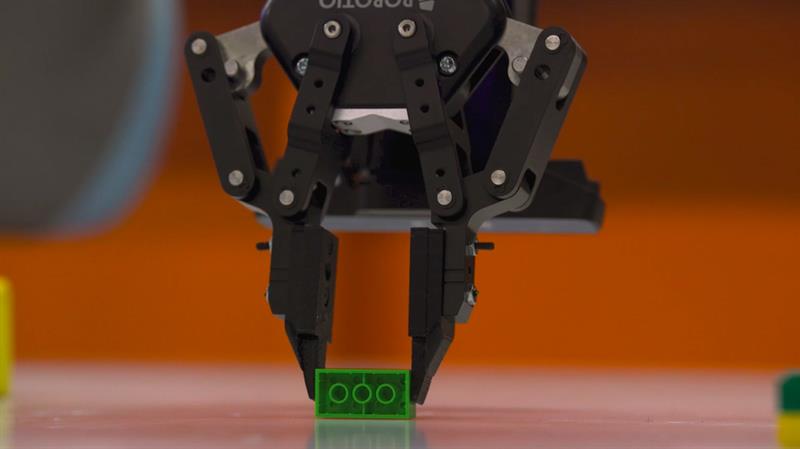

Brick by brick

Another Autodesk project developing AI for industrial robots, based at the San Francisco AI Lab, is ‘BrickBot’. Led by Autodesk’s head of machine intelligence, Mike Haley, BrickBot can build a tower from different-sized Lego bricks with no programming. Again, this application is at a very early stage and isn’t the intended end use, but industrial robotics is one of the biggest segments that’s going to see a huge impact from AI.

Haley says: “The irony of this is that as we’ve looked at things like generative design and techniques to make design more efficient, manufacturing hasn’t become more efficient. So, great, we can design things 10 times faster, but we still have to make them at the same pace as we did before.”

Haley uses the example of a car manufacturing plant. Generally, one model of car is produced on each production line, because it would be too expensive to reconfigure the line every time a new model was designed or sold.

“Today, most factories are dumb; they’re predicated on making things in very large quantities in a very predictable way,” he says. “Everything’s always in exactly the same place and the same thing is going to be done over and over and over again. If you change the design, all of a sudden those repeatable processes get broken and you have to reconfigure them.

“So, how could we use digital information to make factories adaptable, instead of just [repeating] the stupid process over and over again? Wherever a component is lying, the robot can look for it, find it, pick it up and do whatever it needs to do with it. In order to do that, the robot needs to sense its environment, it needs to understand its environment and it needs to understand what to do. We can’t program robots constantly to do that, so we have to have a learning system.”

This is the reason Lego bricks have been used to teach the robot how to build. First, there are many different-sized bricks whose shapes the robot must learn, and many ways in which they can be oriented and combined to produce the final model. Second, Lego bricks might be easy for us to put together, even as children, but for robots it’s very difficult, as the bricks have a tolerance of 0.1mm when being placed directly together.

The robots, a UR10 and a UR5 cobot fitted with cameras and sensors, have been trained only by digital means. Digital simulations of robots grasping bricks can be run in parallel and at incredible speed, meaning 10 million attempts can be simulated in just a few hours. And, once learned, the robot never forgets.

“Let’s take this away from Lego,” says Haley. “Imagine you’re a designer in a manufacturing company: you’ve finished your design, it’s been approved, you’ve checked it in, it goes into the system, you go home. That digital model gets loaded out of the system and put in a simulator, and the AI starts training against it overnight in the cloud. By the next morning the model has been updated with the understanding of how to work with your component, and all the robots in the factory are now ready to go with the thing you designed just the night before. It’s that kind of turnaround: AIs that can now adapt to new digital models, that can learn quickly.”

The BrickBot project is about to be tested by a manufacturer and a construction company with items more complex than Lego bricks. Haley says it is likely to be commercialised in less than 10 years.